Book Review: Designing Data-Intensive Applications

Data is the king. The purpose of the software that we build is to operate on data. But doing that efficiently and reliably at scale is hard. ‘Designing Data-Intensive Applications’ attempts to give you knowledge and tools to do that.

Can You Handle Your Data?

If you try to look at a software industry from 5,000 feet, what are you likely to see? Lots of code, right? What else? A lot of data. Huge volumes of data. It does not matter what you do as a software engineer - most likely you are handling data of some sort. And the sheer volume of that data is getting bigger every day.

As the volume of data grows, requirements for data processing systems grow as well. It is no longer sufficient to store data in a file or even in a database. Often, it is not even sufficient to process data on a single computer!

Various tools have been developed to facilitate data processing. SQL databases, NoSQL databases, graph databases, map-reduce systems, and many others. How do you use them effectively? How do you do it at scale? When your system is distributed? And when it needs to be 100% reliable? It is no easy task. You need to have an in-depth understanding of what is going on under the hood of those tools to be able to make good design decisions.

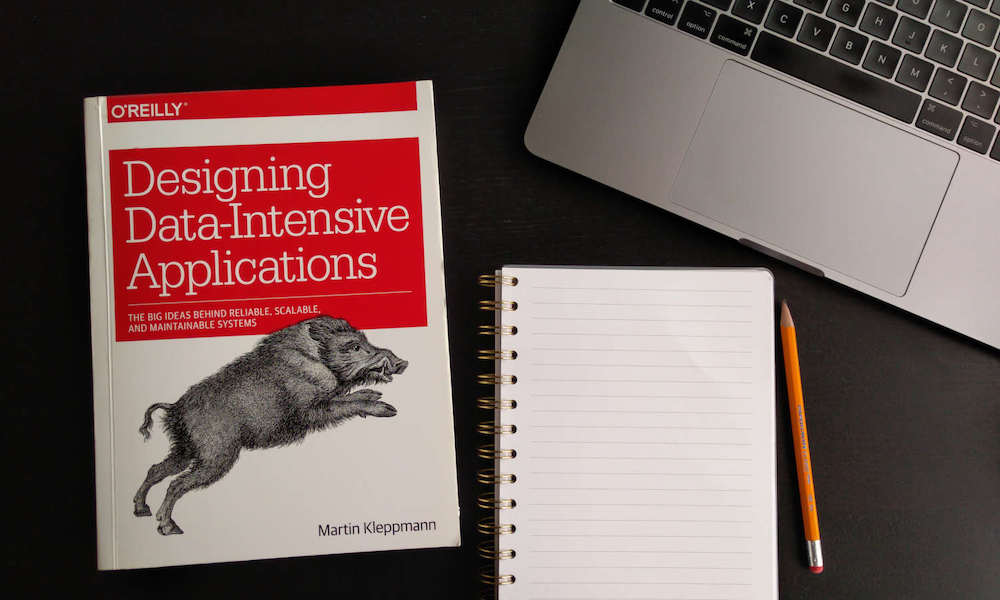

Martin Kleppmann is a researcher of distributed systems at the University of Cambridge, UK. He is also a software engineer who built big data processing systems. His book ‘Designing Data-Intensive Applications’ gives in-depth look at what does it take to build data-intensive systems.

What can you learn

- How to build the simplest database engine in just a few lines of bash code

- What are the fundamental ideas and approaches behind different database types (SQL, NoSQL, etc)

- How to build reliable distributed systems for data processing

- What are the problems with such systems and how to solve them

- What existing data processing tools and approaches exist and how you can use them.

What’s Inside

The books deliver knowledge from the ground up.

First, the author talks about how data is laid out at a low level: how database engines store data on a disk. The book goes into details about writing and reading data, different ways to index data. There’s plenty of information about different approaches for database organization (row-based or column-based storage, key-value storage, etc), what they excel at and why.

Next, the author talks about data in a distributed environment. The book goes in-depth about replication and partitioning, transactions and consistency, how those things affect or contradict each other. The book provides details of how to take into account unreliable networks, clock mismatches, and many other things when building distributed data processing system.

Finally, the book discusses how all those technologies and approaches are used in real life. You can learn about batch and stream data processing, about details of map-reduce technique, what they can and can’t do. The book concludes with the author’s view of the future of data systems and data processing ethics.

The book is filled with examples and detailed explanations. It is very dense, like a 2-year university course compressed into a single book. What I personally liked about this book, is that it provides so much knowledge that is easy to apply, it goes into intricate details of processing large volumes of data, yet keeps perspective on the high-level picture.

Summary

Software designs and requirements change all the time, but fundamental principles do not. I think that this book is a great way to learn fundamental principles that allow building reliable distributed systems to process data at scale. It is not an easy read, not something you can go through fast or remember in great detail. But I feel it is a book that has an important place on a software engineer’s bookshelf. I can be both great learning material and a reliable reference for the future.